ELO Rating

We have started testing a rating for each player based on the Elo rating system.

You can find this rating in the Statistics section: http://sudokucup.com/node/2245

Performance isn't measured absolutely; it is inferred from wins, losses, and draws against other players. A player's rating depends on the ratings of his or her opponents, and the results scored against them. The relative difference in rating between two players determines an estimate for the expected score between them.

A player's expected score is his probability of winning plus half his probability of drawing. Thus an expected score of 0.75 could represent a 75% chance of winning, a 25% chance of losing, and a 0% chance of drawing. At the other extreme, it could represent a 50% chance of winning, a 0% chance of losing, and a 50% chance of drawing. The probability of drawing, as opposed to having a decisive result, is not specified in the Elo system. Instead, a draw is considered half a win and half a loss.
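As a quick check of the arithmetic above, the following minimal sketch computes an expected score from win and draw probabilities. The function name `score_from_probabilities` is only illustrative and does not come from the Sudokucup site.

```python
def score_from_probabilities(p_win: float, p_draw: float) -> float:
    """Expected score = probability of winning + half the probability of drawing."""
    return p_win + 0.5 * p_draw


# Both scenarios from the text give the same expected score.
all_decisive = score_from_probabilities(p_win=0.75, p_draw=0.0)
many_draws = score_from_probabilities(p_win=0.50, p_draw=0.50)
print(all_decisive, many_draws)  # 0.75 0.75
```

This shows why the expected score alone does not say how likely a draw is: very different mixes of wins and draws lead to the same number.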

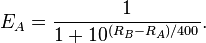

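If Player A has true strength R_A and Player B has true strength R_B, the exact formula (using the logistic curve) for the expected score of Player A is

E_A = 1 / (1 + 10^((R_B - R_A) / 400))

Similarly, the expected score of Player B is

E_B = 1 / (1 + 10^((R_A - R_B) / 400))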

Also note that E_A + E_B = 1. In practice, since the true strength of each player is unknown, the expected scores are calculated using the players' current ratings.
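The expected score can be sketched in a few lines of Python. This assumes the standard logistic Elo formula with the usual chess scale of 400 rating points; whether Sudokucup uses exactly this scale is an assumption, and the function name `expected_score` and the sample ratings are only illustrative.

```python
def expected_score(rating_a: float, rating_b: float) -> float:
    """Expected score of player A against player B (standard logistic Elo formula).

    The divisor 400 is the conventional chess scale, assumed here.
    """
    return 1.0 / (1.0 + 10.0 ** ((rating_b - rating_a) / 400.0))


# Hypothetical current ratings, used in place of the unknown true strengths.
e_a = expected_score(1600, 1400)
e_b = expected_score(1400, 1600)
print(round(e_a, 2), round(e_b, 2))  # roughly 0.76 and 0.24
print(abs(e_a + e_b - 1.0) < 1e-12)  # the two expected scores sum to 1
```

With equal ratings the function returns 0.5 for both players, and a 200-point advantage corresponds to an expected score of about 0.76.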

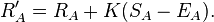

When a player's actual tournament scores exceed his expected scores, the Elo system takes this as evidence that the player's rating is too low and needs to be adjusted upward. Similarly, when a player's actual tournament scores fall short of his expected scores, that player's rating is adjusted downward. Elo's original suggestion, which is still widely used, was a simple linear adjustment proportional to the amount by which a player overperformed or underperformed his expected score. The maximum possible adjustment per game, called the K-factor, was set at K = 10 for masters and K = 15 for weaker players.

Suppose Player A was expected to score E_A points but actually scored S_A points. The formula for updating his rating is

R'_A = R_A + K (S_A - E_A)
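The linear adjustment described above can be sketched as follows. This is a minimal illustration of the standard Elo update, new rating = old rating + K * (actual score - expected score); the function name `update_rating` and the sample numbers are hypothetical.

```python
def update_rating(rating: float, expected: float, actual: float, k: float) -> float:
    """Standard Elo update: move the rating by K times the over- or underperformance."""
    return rating + k * (actual - expected)


# A 1500-rated player with K = 15 who was expected to score 0.5 in one game.
after_win = update_rating(1500, expected=0.5, actual=1.0, k=15)
after_loss = update_rating(1500, expected=0.5, actual=0.0, k=15)
print(after_win, after_loss)  # 1507.5 1492.5
```

For an event with several games, `expected` and `actual` would be the sums of the per-game expected and actual scores.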

Most accurate K-factor

A major concern is the choice of the correct "K-factor". The chess statistician Jeff Sonas believes that the original K=10 value (for players rated above 2400) in Elo's work is inaccurate. If the K-factor is set too large, the rating becomes too sensitive to just a few recent events, because a large number of points is exchanged in each game. If the K-factor is too low, the sensitivity is minimal and the system does not respond quickly enough to changes in a player's actual level of performance.

Elo's original K-factor estimation was made without the benefit of huge databases and statistical evidence. Sonas indicates that a K-factor of 24 (for players rated above 2400) may be more accurate both as a predictive tool of future performance, and also more sensitive to performance.
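To make the sensitivity trade-off concrete, the sketch below compares how many points a single unexpected result moves a rating under different K-factors. The values 10, 24 and 32 are the ones mentioned in this text; the function name `rating_change` and the scenario (a win in a game where the expected score was 0.5) are only illustrative.

```python
def rating_change(k: float, actual: float, expected: float) -> float:
    """Points gained (or lost, if negative) from one result for a given K-factor."""
    return k * (actual - expected)


# One win against an equally rated opponent (expected score 0.5).
for k in (10, 24, 32):
    print(k, rating_change(k, actual=1.0, expected=0.5))
# 10 5.0
# 24 12.0
# 32 16.0
```

A larger K reacts faster to real changes in strength but also swings more on a single lucky or unlucky game.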

Certain Internet chess sites seem to avoid a three-level K-factor staggering based on rating range. For example, the ICC seems to adopt a global K=32 except when playing against provisionally rated players. The USCF (which makes use of a logistic distribution as opposed to a normal distribution) has staggered the K-factor according to three main rating ranges.

Sudokucup uses the following ranges:

K = 25 for a player new to the rating list until s/he has completed events with a total of at least 30 games.

K = 15 as long as a player's rating remains under 2400.

K = 10 once a player's published rating has reached 2400, and s/he has also completed events with a total of at least 30 games. Thereafter it remains permanently at 10.
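One possible reading of the three rules above is sketched below. The function name `k_factor` and the flag `has_reached_2400` are hypothetical and not taken from the Sudokucup site. The sketch assumes that the 30-game rule takes priority, so a new player keeps K = 25 even with a high rating, and that the caller tracks whether the published rating has ever reached 2400.

```python
def k_factor(games_played: int, rating: float, has_reached_2400: bool = False) -> int:
    """Pick a K-factor following one interpretation of the Sudokucup ranges.

    has_reached_2400 is a hypothetical flag remembering that the player's
    published rating reached 2400 at some point after 30 completed games.
    """
    if games_played < 30:
        return 25  # new to the rating list
    if has_reached_2400 or rating >= 2400:
        return 10  # stays at 10 permanently, even if the rating later drops
    return 15      # established player who has stayed under 2400


print(k_factor(games_played=12, rating=1500))                          # 25
print(k_factor(games_played=40, rating=2100))                          # 15
print(k_factor(games_played=40, rating=2410))                          # 10
print(k_factor(games_played=80, rating=2350, has_reached_2400=True))   # 10
```

The last call shows the "remains permanently at 10" rule: a player who once reached 2400 keeps K = 10 after falling back below it.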

You can find more in the Wikipedia article here:

http://en.wikipedia.org/wiki/Elo_rating